AI initiatives often fail not because the model can’t work, but because the organization isn’t structurally ready to own it.

Missing infrastructure, undefined operational ownership, and incomplete data governance turn a “pilot” into an ongoing commitment.

When personal data is involved, jurisdiction and exposure under Brazil’s LGPD (Lei Geral de Proteção de Dados) become part of the decision, along with reputational risk that is hard to reverse.

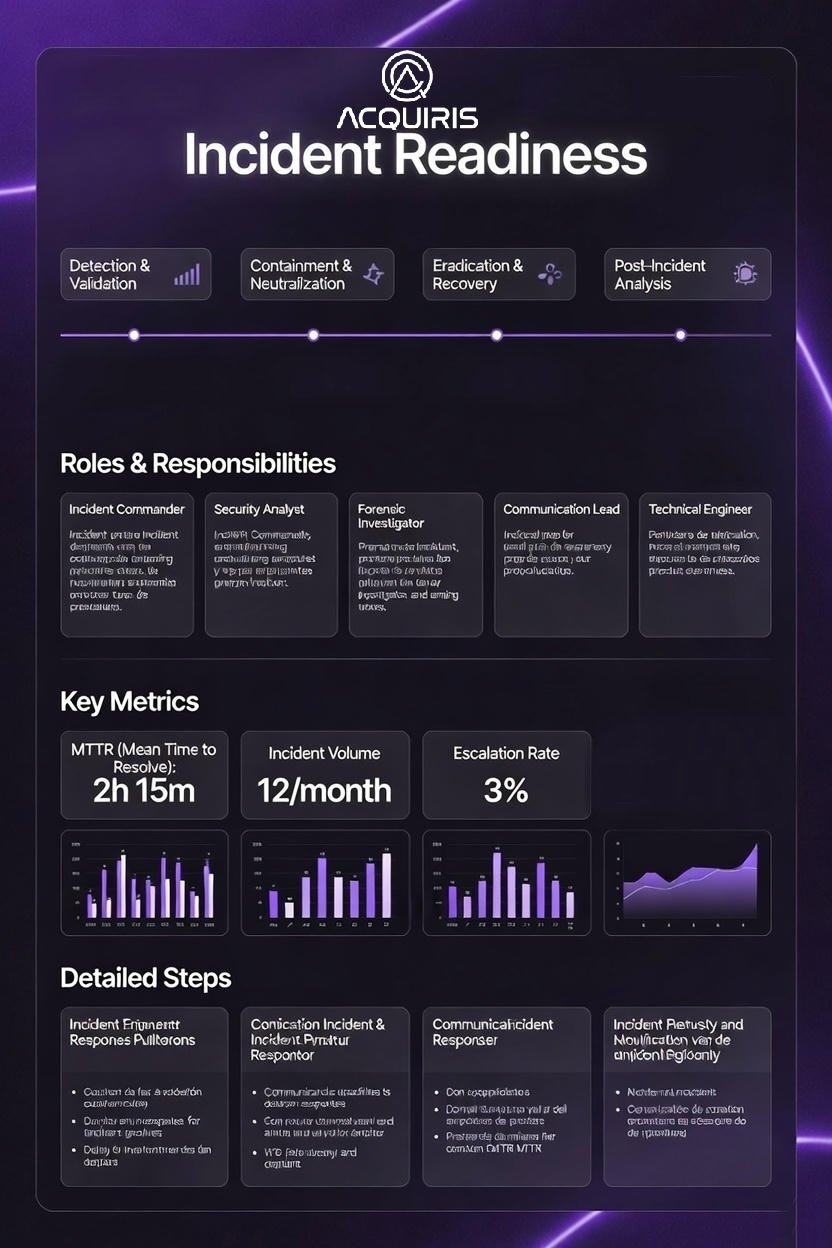

What starts as a signal of modernity can become a dependency, with recurring cost, support load, and ongoing obligations for auditability, traceability, and incident response.

In many cases the real question isn’t “should we do AI?” but which commitment the business can sustain without distorting the operating model.

After mergers, teams can share objectives and still operate on incompatible assumptions.

In strong technical environments, the bottleneck is rarely capability; it’s the absence of minimum executable validation criteria.

Without criteria, critical work starts too early and turns into manual verification before it reaches the right experts.

Weeks disappear into coordination tax, rework, and fixes that never become enforceable boundaries.

The unlock is often a few mechanisms that define what must be true upfront, not a platform rebuild.
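As a rough illustration of what “minimum executable validation criteria” could look like, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Criterion` type, the three example checks, and the submission fields (`owner`, `schema_version`, `sample_size`) are hypothetical, not taken from any specific team’s process.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Mapping


@dataclass(frozen=True)
class Criterion:
    """One named condition that must hold before work is handed to experts."""
    name: str
    check: Callable[[Mapping[str, Any]], bool]


# Hypothetical criteria; a real team would agree on its own short list.
CRITERIA: List[Criterion] = [
    Criterion("has_owner", lambda s: bool(s.get("owner"))),
    Criterion("schema_version_declared", lambda s: "schema_version" in s),
    Criterion("sample_size_at_least_100", lambda s: s.get("sample_size", 0) >= 100),
]


def failed_criteria(submission: Mapping[str, Any],
                    criteria: List[Criterion] = CRITERIA) -> List[str]:
    """Return the names of criteria the submission does not meet, in order."""
    return [c.name for c in criteria if not c.check(submission)]


def ready_for_review(submission: Mapping[str, Any]) -> bool:
    """A submission is ready only when every criterion passes."""
    return not failed_criteria(submission)
```

Calling `failed_criteria(...)` before work enters an expert’s queue turns an implicit expectation into a boundary that can be enforced automatically, which is the point of the mechanism rather than the particular checks shown here.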

Vendor transitions often optimize for price and promises, while integration reality is evaluated late.

The contract rarely covers the hidden work embedded in the legacy setup: connectors, normalization, and downstream assumptions.

When outputs change shape, the customer inherits the cost of recreating consumable formats or rewriting downstream consumers.

A defensible move is to map the integration delta pre‑signature, then normalize at the cheapest upstream point.
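One way to make that mapping concrete is sketched below in Python, under stated assumptions: the field names, types, and the `normalize` adapter are hypothetical examples, not a real vendor’s schema. The sketch compares the legacy output shape with the new one, then converts new records back to the legacy shape in a single upstream adapter so downstream consumers stay unchanged.

```python
from typing import Any, Dict, List, Mapping

# Hypothetical output schemas (field name -> type name), for illustration only.
LEGACY_FIELDS = {"customer_id": "str", "amount_cents": "int", "currency": "str"}
NEW_FIELDS = {"customerId": "str", "amount": "float", "currency": "str"}


def integration_delta(legacy: Mapping[str, str],
                      new: Mapping[str, str]) -> Dict[str, List[str]]:
    """List what downstream consumers would lose, gain, or see retyped."""
    shared = set(legacy) & set(new)
    return {
        "missing": sorted(set(legacy) - set(new)),
        "added": sorted(set(new) - set(legacy)),
        "type_changed": sorted(k for k in shared if legacy[k] != new[k]),
    }


def normalize(record: Mapping[str, Any]) -> Dict[str, Any]:
    """Adapter applied once, upstream: new vendor shape -> legacy shape.

    Assumes `amount` is a decimal currency amount with two minor-unit digits.
    """
    return {
        "customer_id": record["customerId"],
        "amount_cents": round(record["amount"] * 100),
        "currency": record["currency"],
    }
```

Running `integration_delta(LEGACY_FIELDS, NEW_FIELDS)` before signing gives a list of fields that someone will have to reconcile; `normalize` shows where that reconciliation can live so the cost is paid once instead of in every consumer.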

The mistake isn’t “switching.” It’s switching without making explicit what was actually being bought.
